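I added a new disk to one of my dedicated servers and set up software RAID1 on it as a backup. The server had no software RAID before.

The tutorials I found on Baidu were all copy-pasted from one another, and none of them covered the whole process end to end.

After some Googling I got it working, so here are my notes.

Because your data is at stake, I strongly recommend testing the whole procedure in a local virtual machine first, and only then repeating it on the machine you actually need to change.

References: https://www.howtoforge.com/how-to-set-up-software-raid1-on-a-running-lvm-system-incl-grub-configuration-centos-5.3 and Google

The test system is CentOS 7 with GRUB 2.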

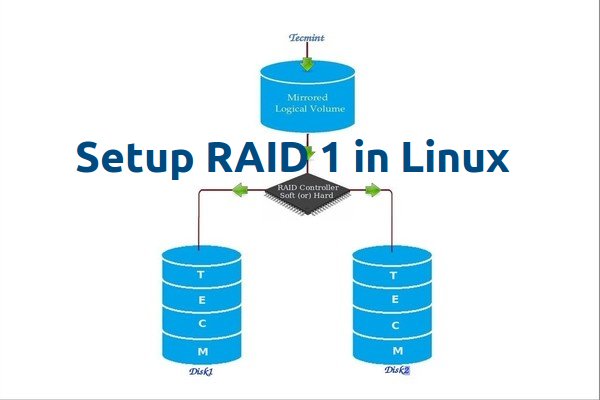

Partition layout before setting up RAID:

- /dev/sda1: /boot partition, ext4;

- /dev/sda2: Linux LVM, containing the root filesystem

- /dev/sdb: the new, unpartitioned disk

Target layout once RAID is set up:

- /dev/md0 (made up of /dev/sda1 and /dev/sdb1): /boot partition, ext4;

- /dev/md1 (made up of /dev/sda2 and /dev/sdb2): LVM, containing /

df -h

[root@server1 ~]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/VolGroup00-LogVol00

8.6G 1.4G 6.8G 17% /

/dev/sda1 99M 13M 82M 14% /boot

tmpfs 250M 0 250M 0% /dev/shm

[root@server1 ~]#

fdisk -l

[root@server1 ~]# fdisk -l

Disk /dev/sda: 10.7 GB, 10737418240 bytes

255 heads, 63 sectors/track, 1305 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sda1 * 1 13 104391 83 Linux

/dev/sda2 14 1305 10377990 8e Linux LVM

Disk /dev/sdb: 10.7 GB, 10737418240 bytes

255 heads, 63 sectors/track, 1305 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Disk /dev/sdb doesn't contain a valid partition table

[root@server1 ~]#

pvdisplay

[root@server1 ~]# pvdisplay

/dev/hdc: open failed: No medium found

--- Physical volume ---

PV Name /dev/sda2

VG Name VolGroup00

PV Size 9.90 GB / not usable 22.76 MB

Allocatable yes (but full)

PE Size (KByte) 32768

Total PE 316

Free PE 0

Allocated PE 316

PV UUID aikFEP-FB15-nB9C-Nfq0-eGMG-hQid-GOsDuj

[root@server1 ~]#

vgdisplay

[root@server1 ~]# vgdisplay

/dev/hdc: open failed: No medium found

--- Volume group ---

VG Name VolGroup00

System ID

Format lvm2

Metadata Areas 1

Metadata Sequence No 3

VG Access read/write

VG Status resizable

MAX LV 0

Cur LV 2

Open LV 2

Max PV 0

Cur PV 1

Act PV 1

VG Size 9.88 GB

PE Size 32.00 MB

Total PE 316

Alloc PE / Size 316 / 9.88 GB

Free PE / Size 0 / 0

VG UUID ZPvC10-cN09-fI0S-Vc8l-vOuZ-wM6F-tlz0Mj

[root@server1 ~]#

lvdisplay

Note down the VG Name (volume group name) and LV Name (logical volume name) that your system currently uses.

[root@server1 ~]# lvdisplay

/dev/hdc: open failed: No medium found

--- Logical volume ---

LV Name /dev/VolGroup00/LogVol00

VG Name VolGroup00

LV UUID vYlky0-Ymx4-PNeK-FTpk-qxvm-PmoZ-3vcNTd

LV Write Access read/write

LV Status available

# open 1

LV Size 8.88 GB

Current LE 284

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 256

Block device 253:0

--- Logical volume ---

LV Name /dev/VolGroup00/LogVol01

VG Name VolGroup00

LV UUID Ml9MMN-DcOA-Lb6V-kWPU-h6IK-P0ww-Gp9vd2

LV Write Access read/write

LV Status available

# open 1

LV Size 1.00 GB

Current LE 32

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 256

Block device 253:1

[root@server1 ~]#
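Copying the names by hand is error-prone, so a small helper can pull them out of the lvdisplay output instead. This is only a minimal sketch and not part of the original procedure: the function name `lv_names` is made up for illustration, and it assumes that the "LV Name" line comes before the "VG Name" line in each block, as in the output above.

```bash
# Read lvdisplay output on stdin and print one "<VG name> <LV name>" pair per
# logical volume. lv_names is a hypothetical helper name.
lv_names() {
    awk '
        $1 == "LV" && $2 == "Name" { lv = $3 }        # remember the LV of this block
        $1 == "VG" && $2 == "Name" { print $3, lv }   # VG Name follows LV Name
    '
}
```

On a live system it would be used as `lvdisplay | lv_names`.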

Now install mdadm and load the RAID kernel modules:

yum install mdadm

modprobe linear

modprobe multipath

modprobe raid0

modprobe raid1

modprobe raid5

modprobe raid6

modprobe raid10

Once that is done, run:

cat /proc/mdstat

The output should look roughly like this:

[root@server1 ~]# cat /proc/mdstat

Personalities : [linear] [multipath] [raid0] [raid1] [raid6] [raid5] [raid4] [raid10]

unused devices: <none>

[root@server1 ~]#
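Only raid1 is strictly needed for the rest of this walkthrough, so it can be worth checking for it specifically rather than eyeballing the Personalities line. A minimal sketch, assuming the bracketed format shown above; `has_personality` is a hypothetical helper name, and the optional file argument exists only so the check can be tried against a saved copy of the output.

```bash
# Succeed (exit status 0) if the given md personality, e.g. raid1, appears on
# the "Personalities" line of an mdstat-style file.
# Usage: has_personality raid1 [file]    (file defaults to /proc/mdstat)
has_personality() {
    local name="$1" file="${2:-/proc/mdstat}"
    # Match the name together with its brackets so that raid1 does not
    # accidentally match [raid10].
    grep '^Personalities' "$file" | grep -qF "[$name]"
}
```

For example: `has_personality raid1 && echo "raid1 is loaded"`.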

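Preparing the new disk sdb

We need to move all the data from the old disk sda onto the new disk sdb.

First, copy the partition table from the old disk to the new one:

sfdisk -d /dev/sda | sfdisk /dev/sdb

The output looks roughly like this: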

[root@server1 ~]# sfdisk -d /dev/sda | sfdisk /dev/sdb

Checking that no-one is using this disk right now ...

OK

Disk /dev/sdb: 1305 cylinders, 255 heads, 63 sectors/track

sfdisk: ERROR: sector 0 does not have an msdos signature

/dev/sdb: unrecognized partition table type

Old situation:

No partitions found

New situation:

Units = sectors of 512 bytes, counting from 0

Device Boot Start End #sectors Id System

/dev/sdb1 * 63 208844 208782 83 Linux

/dev/sdb2 208845 20964824 20755980 8e Linux LVM

/dev/sdb3 0 - 0 0 Empty

/dev/sdb4 0 - 0 0 Empty

Successfully wrote the new partition table

Re-reading the partition table ...

If you created or changed a DOS partition, /dev/foo7, say, then use dd(1)

to zero the first 512 bytes: dd if=/dev/zero of=/dev/foo7 bs=512 count=1

(See fdisk(8).)

[root@server1 ~]#

Then use fdisk -l to confirm the current partition tables:

[root@server1 ~]# fdisk -l

Disk /dev/sda: 10.7 GB, 10737418240 bytes

255 heads, 63 sectors/track, 1305 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sda1 * 1 13 104391 83 Linux

/dev/sda2 14 1305 10377990 8e Linux LVM

Disk /dev/sdb: 10.7 GB, 10737418240 bytes

255 heads, 63 sectors/track, 1305 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sdb1 * 1 13 104391 83 Linux

/dev/sdb2 14 1305 10377990 8e Linux LVM

[root@server1 ~]#

At this point sda and sdb should have identical partition tables.
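The comparison can also be scripted instead of done by eye. The sketch below assumes two dumps have been saved with `sfdisk -d`, and it ignores only the device names, which necessarily differ. `same_layout` and the dump file paths are hypothetical names chosen for illustration.

```bash
# Compare two `sfdisk -d` dumps while ignoring the disk names themselves.
# Usage: same_layout <dump_a> <disk_a> <dump_b> <disk_b>
# Exit status 0 means the layouts are identical.
same_layout() {
    local a b
    # Replace each disk name with a neutral token before comparing.
    a=$(sed "s#$2#DISK#g" "$1")
    b=$(sed "s#$4#DISK#g" "$3")
    [ "$a" = "$b" ]
}
```

A possible invocation, run before the partition types on sdb are changed:

```bash
sfdisk -d /dev/sda > /tmp/sda.dump
sfdisk -d /dev/sdb > /tmp/sdb.dump
same_layout /tmp/sda.dump /dev/sda /tmp/sdb.dump /dev/sdb && echo "layouts match"
```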

Next, change the type of the partitions on sdb to Linux raid autodetect:

fdisk /dev/sdb

[root@server1 ~]# fdisk /dev/sdb

The number of cylinders for this disk is set to 1305.

There is nothing wrong with that, but this is larger than 1024,

and could in certain setups cause problems with:

1) software that runs at boot time (e.g., old versions of LILO)

2) booting and partitioning software from other OSs

(e.g., DOS FDISK, OS/2 FDISK)

Command (m for help): <-- t (type t)

Partition number (1-4): <-- 1 (type 1)

Hex code (type L to list codes): <-- fd (type fd)

Changed system type of partition 1 to fd (Linux raid autodetect)

Command (m for help): <-- t (type t)

Partition number (1-4): <-- 2 (type 2)

Hex code (type L to list codes): <-- fd (type fd)

Changed system type of partition 2 to fd (Linux raid autodetect)

Command (m for help): <-- w (type w to save)

The partition table has been altered!

Calling ioctl() to re-read partition table.

Syncing disks.

[root@server1 ~]#

Run fdisk -l to confirm that both partitions on sdb are now of type Linux raid autodetect:

[root@server1 ~]# fdisk -l

Disk /dev/sda: 10.7 GB, 10737418240 bytes

255 heads, 63 sectors/track, 1305 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sda1 * 1 13 104391 83 Linux

/dev/sda2 14 1305 10377990 8e Linux LVM

Disk /dev/sdb: 10.7 GB, 10737418240 bytes

255 heads, 63 sectors/track, 1305 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sdb1 * 1 13 104391 fd Linux raid autodetect

/dev/sdb2 14 1305 10377990 fd Linux raid autodetect

[root@server1 ~]#

Next, clear any leftover RAID metadata from the new disk:

mdadm --zero-superblock /dev/sdb1

mdadm --zero-superblock /dev/sdb2

If the disk has never been part of a RAID array, the output looks roughly like this:

[root@server1 ~]# mdadm --zero-superblock /dev/sdb1

mdadm: Unrecognised md component device - /dev/sdb1

[root@server1 ~]#

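This is normal and nothing to worry about. If old RAID metadata was present, the command prints nothing.

Now we can create the RAID arrays /dev/md0 and /dev/md1. /dev/sdb1 goes into /dev/md0 and /dev/sdb2 goes into /dev/md1. /dev/sda1 and /dev/sda2 cannot be added yet because they still hold the live data, which has not been moved, so we use the placeholder missing in their place:

mdadm --create /dev/md0 --level=1 --raid-disks=2 missing /dev/sdb1

mdadm --create /dev/md1 --level=1 --raid-disks=2 missing /dev/sdb2

Check the arrays we just created:

cat /proc/mdstat

[root@server1 ~]# cat /proc/mdstat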

Personalities : [linear] [multipath] [raid0] [raid1] [raid6] [raid5] [raid4] [raid10]

md1 : active raid1 sdb2[1]

10377920 blocks [2/1] [_U]

md0 : active raid1 sdb1[1]

104320 blocks [2/1] [_U]
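In the status field, `[_U]` means the first slot of the mirror is still empty, which is expected at this stage because of the missing placeholder. A minimal sketch for listing degraded arrays from mdstat-style text follows; `degraded_arrays` is a hypothetical helper name, and it assumes the `[UU]`/`[_U]` status format shown above.

```bash
# Print the name of every md array whose member-status field contains an
# underscore, i.e. at least one mirror slot is empty or failed.
# Usage: degraded_arrays [file]    (file defaults to /proc/mdstat)
degraded_arrays() {
    local file="${1:-/proc/mdstat}"
    awk '
        /^md[0-9]+ :/ { name = $1 }              # start of an array entry
        /\[[U_]+\]/ && name != "" {              # line carrying the [UU]-style field
            if ($0 ~ /\[[U_]*_[U_]*\]/) print name
            name = ""
        }
    ' "$file"
}
```

Run against the output above it would print md1 and md0, one per line. Once both disks are in sync it should print nothing.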

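Since md0 will be used as /boot, format it with the same filesystem as the current /boot, which is ext4 here:

mkfs.ext4 /dev/md0

Since md1 will be used for LVM, initialize it as a physical volume:

pvcreate /dev/md1

Then add /dev/md1 to the existing volume group VolGroup00:

vgextend VolGroup00 /dev/md1

Check the current state of the volume group:

pvdisplay

The output looks roughly like this: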

[root@server1 ~]# pvdisplay

--- Physical volume ---

PV Name /dev/sda2

VG Name VolGroup00

PV Size 9.90 GB / not usable 22.76 MB

Allocatable yes (but full)

PE Size (KByte) 32768

Total PE 316

Free PE 0

Allocated PE 316

PV UUID aikFEP-FB15-nB9C-Nfq0-eGMG-hQid-GOsDuj

--- Physical volume ---

PV Name /dev/md1

VG Name VolGroup00

PV Size 9.90 GB / not usable 22.69 MB

Allocatable yes

PE Size (KByte) 32768

Total PE 316

Free PE 316

Allocated PE 0

PV UUID u6IZfM-5Zj8-kFaG-YN8K-kjAd-3Kfv-0oYk7J

[root@server1 ~]#

vgdisplay

The output looks roughly like this:

[root@server1 ~]# vgdisplay

--- Volume group ---

VG Name VolGroup00

System ID

Format lvm2

Metadata Areas 2

Metadata Sequence No 4

VG Access read/write

VG Status resizable

MAX LV 0

Cur LV 2

Open LV 2

Max PV 0

Cur PV 2

Act PV 2

VG Size 19.75 GB

PE Size 32.00 MB

Total PE 632

Alloc PE / Size 316 / 9.88 GB

Free PE / Size 316 / 9.88 GB

VG UUID ZPvC10-cN09-fI0S-Vc8l-vOuZ-wM6F-tlz0Mj

[root@server1 ~]#

Now create a new /etc/mdadm.conf:

mdadm --verbose --detail --scan > /etc/mdadm.conf

View mdadm.conf:

cat /etc/mdadm.conf

The output looks roughly like this (yours will likely differ, since the UUIDs are unique to each system):

ARRAY /dev/md0 level=raid1 num-devices=2 UUID=0a96be0f:bf0f4631:a910285b:0f337164

ARRAY /dev/md1 level=raid1 num-devices=2 UUID=f9e691e2:8d25d314:40f42444:7dbe1da1

Now we can move the existing logical volume data onto /dev/md1 (that is, onto sdb2):

pvmove /dev/sda2 /dev/md1

Then remove sda2 from the volume group:

vgreduce VolGroup00 /dev/sda2

pvremove /dev/sda2

The physical volumes now look like this:

pvdisplay

[root@server1 ~]# pvdisplay

--- Physical volume ---

PV Name /dev/md1

VG Name VolGroup00

PV Size 9.90 GB / not usable 22.69 MB

Allocatable yes (but full)

PE Size (KByte) 32768

Total PE 316

Free PE 0

Allocated PE 316

PV UUID u6IZfM-5Zj8-kFaG-YN8K-kjAd-3Kfv-0oYk7J

[root@server1 ~]#

Now sda2 can join sdb2 in the RAID1 array md1. First change the type of /dev/sda2 to Linux raid autodetect:

fdisk /dev/sda

[root@server1 ~]# fdisk /dev/sda

The number of cylinders for this disk is set to 1305.

There is nothing wrong with that, but this is larger than 1024,

and could in certain setups cause problems with:

1) software that runs at boot time (e.g., old versions of LILO)

2) booting and partitioning software from other OSs

(e.g., DOS FDISK, OS/2 FDISK)

Command (m for help): <-- t (type t)

Partition number (1-4): <-- 2 (type 2)

Hex code (type L to list codes): <-- fd (type fd)

Changed system type of partition 2 to fd (Linux raid autodetect)

Command (m for help): <-- w (type w to save)

The partition table has been altered!

Calling ioctl() to re-read partition table.

WARNING: Re-reading the partition table failed with error 16: Device or resource busy.

The kernel still uses the old table.

The new table will be used at the next reboot.

Syncing disks.

[root@server1 ~]#

mdadm --add /dev/md1 /dev/sda2

Now check the current state of the arrays:

cat /proc/mdstat

[root@server1 ~]# cat /proc/mdstat

Personalities : [linear] [multipath] [raid0] [raid1] [raid6] [raid5] [raid4] [raid10]

md1 : active raid1 sda2[2] sdb2[1]

10377920 blocks [2/1] [_U]

[====>................] recovery = 23.4% (2436544/10377920) finish=2.0min speed=64332K/sec

md0 : active raid1 sdb1[1]

104320 blocks [2/1] [_U]

unused devices: <none>

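[root@server1 ~]#

As you can see, the system is automatically syncing the data between sda2 and sdb2. This can take quite a long time.

You can use

watch cat /proc/mdstat

to monitor the progress in real time.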
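If the following steps are scripted rather than typed by hand, a blocking wait may be more convenient than watching the screen. This is a rough sketch that polls for the `recovery =` or `resync =` progress line shown above. `wait_for_sync` and its polling interval are illustrative choices, not something the original procedure prescribes.

```bash
# Block until no array in the given mdstat-style file reports a running or
# pending recovery/resync.
# Usage: wait_for_sync [file] [interval_seconds]
wait_for_sync() {
    local file="${1:-/proc/mdstat}" interval="${2:-10}"
    # The progress line looks like "... recovery = 23.4% (...)".
    while grep -Eq '(recovery|resync) *=' "$file"; do
        sleep "$interval"
    done
}
```

For example, `wait_for_sync && echo "sync finished"` returns only once the progress line has disappeared.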

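Once the sync has finished, we need to build a new initramfs so that the system can still boot.

This walkthrough uses GRUB 2. For GRUB (legacy) or extlinux, use the GRUB 2 steps as a reference and adapt the configuration files under /boot and the bootloader installation to your own setup.

First run

mdadm --verbose --detail --scan

and note down the UUID of every md device.

Then edit

/etc/default/grub

and add the following to the GRUB_CMDLINE_LINUX line (replace each placeholder with the actual UUID, without quotes):

rd.md.uuid=<UUID of md0> rd.md.uuid=<UUID of md1>

After saving and exiting, run

grub2-mkconfig -o /boot/grub2/grub.cfg

Then run

dracut --regenerate-all -fv --mdadmconf --fstab --add=mdraid --add-driver="raid1 raid10 raid456"

When that completes, reinstall GRUB 2 on both disks:

grub2-install /dev/sda

grub2-install /dev/sdb

Then run reboot to restart the machine.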

Normally the system comes back up without problems.
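The rd.md.uuid arguments from the GRUB step above are easy to mistype, so before that reboot it may be worth generating them from mdadm's own output rather than copying them by hand. A minimal sketch, assuming each ARRAY line contains a `UUID=` token as in the mdadm.conf shown earlier; `md_uuid_args` is a hypothetical helper name.

```bash
# Read `mdadm --detail --scan` style lines on stdin and print a single line of
# rd.md.uuid=<uuid> arguments, one per ARRAY entry.
md_uuid_args() {
    awk '
        $1 == "ARRAY" {
            for (i = 2; i <= NF; i++)
                if ($i ~ /^UUID=/) {
                    sub(/^UUID=/, "", $i)                       # keep only the UUID value
                    out = out (out == "" ? "" : " ") "rd.md.uuid=" $i
                }
        }
        END { if (out != "") print out }
    '
}
```

On the live system this would be run as `mdadm --detail --scan | md_uuid_args`, and the printed line compared with what was added to GRUB_CMDLINE_LINUX.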

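If the system fails to assemble the RAID devices into md* automatically after the reboot, wait for the timeout and then run the following in the initramfs shell:

mdadm --assemble --scan --run --auto=yes

lvm vgchange -ay

exit

This assembles the md* devices by hand, activates LVM, and then continues the boot.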

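Once the system has rebooted normally, we can deal with md0.

mkdir /mnt/md0

mount /dev/md0 /mnt/md0

Then copy everything from the current /boot partition onto md0:

cd /boot

cp -dpRx . /mnt/md0

Then edit fstab and, if it can be edited, mtab (on CentOS 7 /etc/mtab is usually a symlink to /proc/self/mounts, in which case skip it).

fstab:

Change the device mounted on /boot to /dev/md0. The sample below comes from an ext3 system; keep the filesystem type that matches yours (ext4 in this walkthrough).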

/dev/VolGroup00/LogVol00 / ext3 defaults 1 1

/dev/md0 /boot ext3 defaults 1 2

tmpfs /dev/shm tmpfs defaults 0 0

devpts /dev/pts devpts gid=5,mode=620 0 0

sysfs /sys sysfs defaults 0 0

proc /proc proc defaults 0 0

/dev/VolGroup00/LogVol01 swap swap defaults 0 0

mtab:

Same change as above:

/dev/mapper/VolGroup00-LogVol00 / ext3 rw 0 0

proc /proc proc rw 0 0

sysfs /sys sysfs rw 0 0

devpts /dev/pts devpts rw,gid=5,mode=620 0 0

/dev/md0 /boot ext3 rw 0 0

tmpfs /dev/shm tmpfs rw 0 0

none /proc/sys/fs/binfmt_misc binfmt_misc rw 0 0

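sunrpc /var/lib/nfs/rpc_pipefs rpc_pipefs rw 0 0

Now /dev/sda1 can be merged into md0. mdadm can only add a partition that is not in use, so if /boot is still mounted from /dev/sda1, unmount it first and mount /dev/md0 on /boot instead. Then change the type of /dev/sda1:

fdisk /dev/sda

[root@server1 ~]# fdisk /dev/sda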

The number of cylinders for this disk is set to 1305.

There is nothing wrong with that, but this is larger than 1024,

and could in certain setups cause problems with:

1) software that runs at boot time (e.g., old versions of LILO)

2) booting and partitioning software from other OSs

(e.g., DOS FDISK, OS/2 FDISK)

Command (m for help): <-- t (type t)

Partition number (1-4): <-- 1 (type 1)

Hex code (type L to list codes): <-- fd (type fd)

Changed system type of partition 1 to fd (Linux raid autodetect)

Command (m for help): <-- w (type w to save)

The partition table has been altered!

Calling ioctl() to re-read partition table.

WARNING: Re-reading the partition table failed with error 16: Device or resource busy.

The kernel still uses the old table.

The new table will be used at the next reboot.

Syncing disks.

[root@server1 ~]#

Then add sda1 to md0:

mdadm --add /dev/md0 /dev/sda1

cat /proc/mdstat

The sync should finish quickly. The output looks roughly like this:

[root@server1 ~]# cat /proc/mdstat

Personalities : [raid1]

md0 : active raid1 sda1[0] sdb1[1]

104320 blocks [2/2] [UU]

md1 : active raid1 sdb2[1] sda2[0]

10377920 blocks [2/2] [UU]

unused devices: <none>

[root@server1 ~]#

Then update mdadm.conf:

mdadm --verbose --detail --scan > /etc/mdadm.conf

Then, just to be safe, rebuild the initramfs once more:

dracut --regenerate-all -fv --mdadmconf --fstab --add=mdraid --add-driver="raid1 raid10 raid456"

Now comes the moment of truth: reboot.

reboot

If nothing went wrong, the setup is complete.